UiPath Autopilot™ for testers

Introduction

Welcome to the first part of the UiPath Autopilot™ article series.

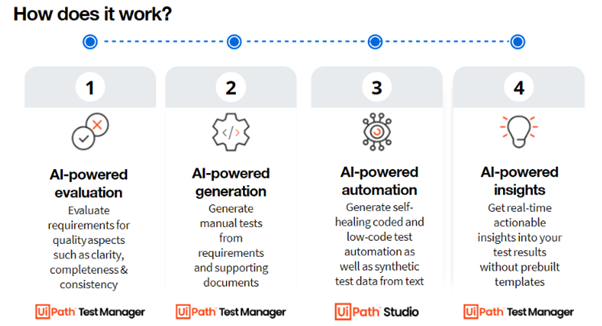

Autopilot has changed testing by bringing AI to testers across the entire testing lifecycle. It evaluates requirements for quality and generates manual tests from those requirements. It also helps turn these manual tests into coded and low-code automations, and provides actionable insights into test results.

This article focuses on the first two pillars of Autopilot: AI-powered evaluation and AI-powered generation. The remaining two pillars, AI-powered automation and AI-powered insights, will be covered in the next article. Stay tuned to the UiPath Community blog for product tutorials.

To demonstrate the AI-powered capabilities of Autopilot for testers, we will test the website https://uibank.uipath.com/ and explore each capability in turn.

Let’s start by writing the requirements in natural language and then see how we can easily evaluate the requirements.

AI-powered evaluation

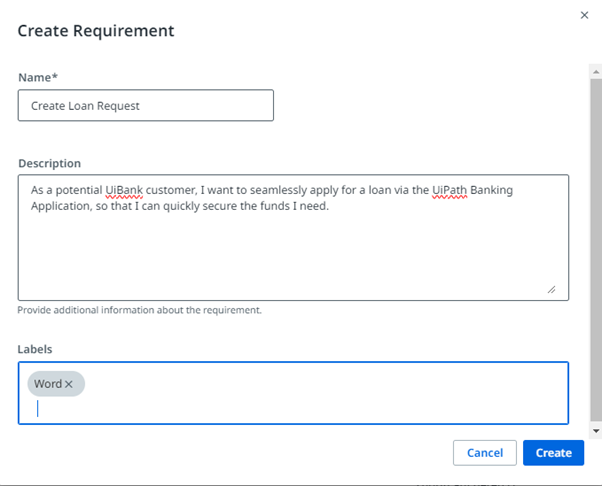

First, let's define the requirements in the Requirements section of Test Manager. For example, we create the requirement Create Loan Request by clicking the Create Requirements button in the Requirements section.

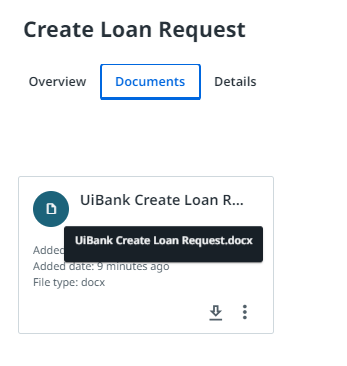

We can specify the details of the requirement and add documents for additional context in the requirement's Documents tab, as shown below.

Now, let's see how to evaluate the quality of this requirement. As shown below, the user only needs to click the Evaluate Quality button.

As can be seen above, we can add more supporting files, if needed, to help Autopilot better understand the requirement. We skipped this step here because we had already attached the detailed requirements file. Supporting files can be in various formats, such as Word or Excel files, images, PDF files, text files, and BPMN diagrams. Next, before clicking the Evaluate Quality button, we select the out-of-the-box Multifaceted Audit prompt from the prompt library, which instructs Autopilot to evaluate the requirement from diverse perspectives. We can also define our own prompt (set of instructions).

Once the evaluation is complete, a notification appears in the notification tab, and we can view the results as shown below.

As we can see above, Autopilot generated ten suggestions as requested, and we can add any of them to the requirement. Added suggestions go directly into the requirement's description. If you need more suggestions, click the Suggest More button to get ten additional ones. If the suggestions do not align with what you are looking for, you can refine them by clicking the Regenerate button.

AI-powered generation

Next, let's see how to use the AI-powered generation feature to quickly generate manual test cases from a requirement.

As shown above, the user only needs to click the Generate tests button and provide an appropriate prompt. In this example, I selected the Valid E2E Scenario Testing prompt, but I can also add my own instructions, for example, “Please keep the test cases title limited to five words.” or “Focus on realistic test scenarios to generate thirty test cases.” Autopilot takes a minute or two to generate the test cases, depending on how many you are generating.

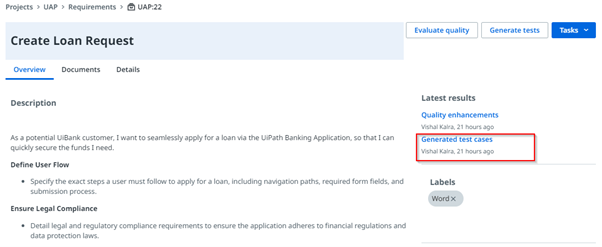

Once the test cases have been generated, the user can click the related notification and select the appropriate manual test case. As shown above, the generated test cases are also linked directly to the requirement.

The user can also click the shortcut Generated test cases within the latest results section to navigate to the generated test cases.

The user can review the test cases and click the Regenerate button if they are not satisfied with the results.

Additional features

Autopilot can also be used to generate test cases based on visual diagrams. How cool is that? Let’s see it in action below.

As we can see above, we have a visual representation of the discount calculation requirement, which we use to generate the test cases.

Once generated, we can see the generated test cases as follows:

We can also generate test cases in different languages, so they can be localized to the user's needs.

Let us see this in action for the same use case.

Above, we generated ten test cases in German. This feature can therefore address localization requirements for your non-English-speaking users as well.

We can also create test cases from a transcript. In the following figure, we use a transcript of a discussion between two users about the discount calculation logic and requirements as the basis for test case generation.

As we can see, Autopilot is able to generate test cases from the content of the document. How awesome is that?

Autopilot reshapes the testing process by evaluating the quality of requirements and helping testers generate a variety of test cases from simple natural-language instructions. By leveraging AI-driven capabilities, Autopilot helps make testing faster, more accurate, and more reliable by reducing manual errors and streamlining workflows.

Stay tuned for the next article, which explores the remaining two pillars.

Meanwhile, discover how Autopilot empowers testers with AI throughout the entire testing lifecycle. Elevate your testing efficiency with Autopilot for testers!

Topics:

AIArchitect, Applications Software Technology LLC (AST)
